diff --git a/mlir/docs/Dialects/Linalg.md b/mlir/docs/Dialects/Linalg.md

--- a/mlir/docs/Dialects/Linalg.md

+++ b/mlir/docs/Dialects/Linalg.md

@@ -6,12 +6,12 @@

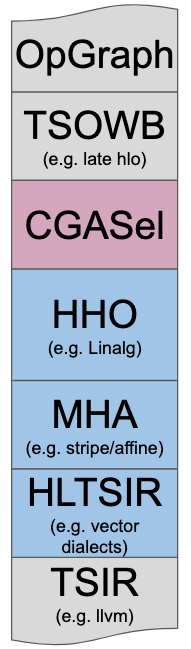

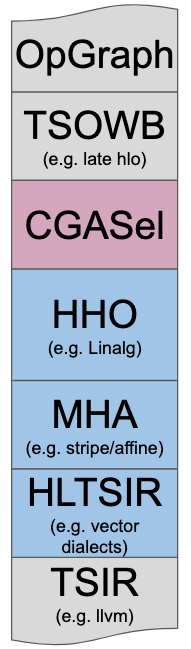

-Linalg is designed to solve the High-level Hierarchical Optimization

-(HHO box) in MLIR and to interoperate nicely within a

-*Mixture Of Expert Compilers* environment (i.e. the *CGSel* box).

+Linalg is designed to solve the High-level Hierarchical Optimization (HHO box)

+in MLIR and to interoperate nicely within a *Mixture Of Expert Compilers*

+environment (i.e. the *CGSel* box).

-The [Rationale Document](../Rationale/RationaleLinalgDialect.md)

-goes into significantly more design and architectural decision details.

+The [Rationale Document](../Rationale/RationaleLinalgDialect.md) goes into

+significantly more design and architectural decision details.

## Set of Key Transformations

@@ -20,51 +20,72 @@

`linalg.generic` OpInterface and avoid the pitfall of relying on hardcoded

one-off op knowledge.

-The textual form description of these transformations is left for future

-work. Still, it is useful to at least the key transformations that are

-performed on the Linalg IR and that have influenced its design:

-1. Progressive Buffer Allocation.

-1. Parametric Tiling.

-1. Promotion to Temporary Buffer in Fast Memory.

-1. Tiled Producer-Consumer Fusion with Parametric Tile-And-Fuse.

-1. Map to Parallel and Reduction Loops and Hardware.

-1. Vectorization: Rewrite in Vector Form.

-1. Lower to Loops (Affine, Generic, and Parallel).

-1. Lower to Library Calls or Special Instructions, Intrinsics or ISA.

-1. Partially Lower to Iterations Over a Finer-Grained Linalg Op.

+The textual form description of these transformations is left for future work.

+Still, it is useful to at least list the key transformations that are performed

+on the Linalg IR and that have influenced its design:

+

+1. Progressive Buffer Allocation.

+1. Parametric Tiling.

+1. Promotion to Temporary Buffer in Fast Memory.

+1. Tiled Producer-Consumer Fusion with Parametric Tile-And-Fuse.

+1. Map to Parallel and Reduction Loops and Hardware.

+1. Vectorization: Rewrite in Vector Form.

+1. Lower to Loops (Affine, Generic, and Parallel).

+1. Lower to Library Calls or Special Instructions, Intrinsics or ISA.

+1. Partially Lower to Iterations Over a Finer-Grained Linalg Op.

## High-Level Description of Linalg Ops

-Linalg takes at least some inspiration from all previously [listed prior

-art](#prior_art). The design enables the definition of ***CustomOps*** with

-generic properties that enable [key transformations](#key_transformations),

-including lowering to scalar load/store and other operations or to external

-library calls and intrinsics.

-These ops can have ***either tensor or buffer operands***, subject to

-[conventions and limitations](#tensors_and_buffers).

+Linalg takes at least some inspiration from all previously

+[listed prior art](#prior_art). The design enables the definition of

+***CustomOps*** with generic properties that enable

+[key transformations](#key_transformations), including lowering to scalar

+load/store and other operations or to external library calls and intrinsics.

+

+These ops can have ***either tensor or buffer*** operands, both as inputs and as

+outputs. Output tensor operands provide a unifying abstraction and give a shape

+to the result tensors, as described in the Discourse discussion

+[Linalg and Shapes](https://llvm.discourse.group/t/linalg-and-shapes/2421).

+

+Output tensors can come in 2 flavors and are always associated with a

+corresponding op result:

+

+1. an "init tensor" output value, which provides an initial value for a tensor

+ that is created by iteratively updating the result (also called "destructive

+ updates"). Such a tensor is always materialized in some form. If enough

+ fusion occurs, it may end up being materialized only as a register-level SSA

+ value. It is expected (but not required) that the destructive update pattern

+ can be rewritten as an in-place update on buffers.

+

+2. a "shape-only" tensor output value which is write-only and only serves the

+ purpose of carrying shape information to lower levels of abstraction. In the

+ future this will be replaced by an appropriate shape type when it is

+ available as a builtin type (see the discourse discussion

+ [Linalg and Shapes](https://llvm.discourse.group/t/linalg-and-shapes/2421)

+ for more details).
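The "init tensor" flavor can be illustrated with a minimal Python sketch. This is not Linalg code, and the row-sum example and the function names `row_sum_tensor` / `row_sum_buffer` are hypothetical choices for illustration:

- The tensor version never mutates `init`. It builds a fresh value through a chain of destructive updates.
- The buffer version performs the same updates in place.

```python
from typing import List

Matrix = List[List[float]]


def row_sum_tensor(a: Matrix, init: List[float]) -> List[float]:
    """Tensor semantics: `init` only provides the initial value of the result."""
    result = tuple(init)  # immutable value, standing in for an SSA tensor
    for i, row in enumerate(a):
        for x in row:
            # Each "destructive update" produces a new value instead of mutating.
            result = result[:i] + (result[i] + x,) + result[i + 1:]
    return list(result)


def row_sum_buffer(a: Matrix, out: List[float]) -> None:
    """Buffer semantics: the same updates, performed in place on `out`."""
    for i, row in enumerate(a):
        for x in row:
            out[i] += x


a = [[1.0, 2.0], [3.0, 4.0]]
init = [0.0, 10.0]
print(row_sum_tensor(a, init), init)  # [3.0, 17.0] [0.0, 10.0]
buf = list(init)
row_sum_buffer(a, buf)
print(buf)  # [3.0, 17.0]
```

Rewriting the first form into the second is only safe when nothing else reads `init` after the op.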

### Payload-Carrying Ops

-Linalg defines two payload carrying operations that implement the [structured ops](

-https://docs.google.com/presentation/d/1P-j1GrH6Q5gLBjao0afQ-GfvcAeF-QU4GXXeSy0eJ9I/edit#slide=id.p

-) abstraction on tensors and buffers. This is architected as two generic operations

-`linalg.generic` (resp. `linalg.indexed_generic`) that can express custom

-operations with *index-free semantics* (resp. *indexing semantics*).

-The properties of these generic ops are the result of applying the

-guiding principles described in the [Rationale Document](../Rationale/RationaleLinalgDialect.md).

-They are listed next, with a brief example and discussion for each.

+

+Linalg defines two payload-carrying operations that implement the

+[structured ops](https://docs.google.com/presentation/d/1P-j1GrH6Q5gLBjao0afQ-GfvcAeF-QU4GXXeSy0eJ9I/edit#slide=id.p)

+abstraction on tensors and buffers. This is architected as two generic

+operations `linalg.generic` (resp. `linalg.indexed_generic`) that can express

+custom operations with *index-free semantics* (resp. *indexing semantics*). The

+properties of these generic ops are the result of applying the guiding

+principles described in the

+[Rationale Document](../Rationale/RationaleLinalgDialect.md). They are listed

+next, with a brief example and discussion for each.

#### Property 1: Input and Output Operands Define The Iteration Space

+

A `linalg.generic` op fully *derives* the specification of its iteration space

-from its operands.

-The property enforces that a localized IR element (the op) *has* all the information

-needed to synthesize the control-flow required to iterate over its operands,

-according to their type. This notion of IR localization bears some resemblance

-to [URUK](http://icps.u-strasbg.fr/~bastoul/research/papers/GVBCPST06-IJPP.pdf).

+from its operands. The property enforces that a localized IR element (the op)

+*has* all the information needed to synthesize the control-flow required to

+iterate over its operands, according to their type. This notion of IR

+localization bears some resemblance to

+[URUK](http://icps.u-strasbg.fr/~bastoul/research/papers/GVBCPST06-IJPP.pdf).

-Consider the following fully specified `linalg.generic` example.

-Here, the first operand is a `memref` of `f32` scalar elements that

-has an ordinary identity layout, and the second one is a `memref` of

-4-element vectors with a 2-strided, 1-offset layout.

+Consider the following fully specified `linalg.generic` example. Here, the first

+operand is a `memref` of `f32` scalar elements that has an ordinary identity

+layout, and the second one is a `memref` of 4-element vectors with a 2-strided,

+1-offset layout.

```mlir

// File name: example1.mlir

@@ -117,39 +138,41 @@

The property participates in simplifying analyses and transformations. For

instance, it guarantees no out-of-bounds access can occur by construction

-(assuming dynamic operand dimensions agree with each other, which is the

-purpose of the `assert` runtime check).

+(assuming dynamic operand dimensions agree with each other, which is the purpose

+of the `assert` runtime check).
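A minimal Python sketch can make concrete the idea that the iteration space is *derived* from the operands. It rests on these assumptions:

- Every indexing-map result is a single loop dimension.
- Each map is encoded as a tuple of loop indices, so `(0, 2)` stands for `affine_map<(i, j, k) -> (i, k)>`.
- `derive_loop_bounds` is a hypothetical helper, not an MLIR API.
- Its mismatch check plays the role of the runtime `assert` on dynamic dimensions.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical encoding: (0, 2) stands for affine_map<(i, j, k) -> (i, k)>.
IndexingMap = Tuple[int, ...]


def derive_loop_bounds(shapes: Sequence[Sequence[int]],
                       maps: Sequence[IndexingMap],
                       num_loops: int) -> List[int]:
    """Infer each loop's extent from the operand shapes and indexing maps."""
    bounds: Dict[int, int] = {}
    for shape, amap in zip(shapes, maps):
        if len(shape) != len(amap):
            raise ValueError("indexing map results must match the operand rank")
        for extent, loop in zip(shape, amap):
            if loop not in bounds:
                bounds[loop] = extent
            elif bounds[loop] != extent:
                # Plays the role of the runtime `assert` on dynamic dimensions.
                raise ValueError(f"operands disagree on the extent of loop {loop}")
    missing = [d for d in range(num_loops) if d not in bounds]
    if missing:
        raise ValueError(f"loops {missing} are not defined by any operand")
    return [bounds[d] for d in range(num_loops)]


# Matmul-like access pattern: A(i, k), B(k, j), C(i, j).
print(derive_loop_bounds([(4, 3), (3, 5), (4, 5)], [(0, 2), (2, 1), (0, 1)], 3))  # [4, 5, 3]
```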

-Before lowering to loop form, loop induction variables and iterators are *not yet

-materialized*. This is a necessary property if we want an abstraction that

+Before lowering to loop form, loop induction variables and iterators are *not

+yet materialized*. This is a necessary property if we want an abstraction that

works on both tensor values and buffers because ***values don’t escape

loops/nesting***.

The main implications are that:

-1. The semantics of the ops are *restricted to operate on structured data

-types*, on which we can define an iterator.

-2. This does not model arbitrary code with side-effects.

+

+1. The semantics of the ops are *restricted to operate on structured data

+ types*, on which we can define an iterator.

+

+2. This does not model arbitrary code with side-effects.

We do not think these are serious limitations in practice because MLIR is all

-about mixing different levels of abstractions in the same IR. As long as

-Linalg can progressively lower to the next level of abstraction, it can also

-be just bypassed for things that do not fit.

+about mixing different levels of abstractions in the same IR. As long as Linalg

+can progressively lower to the next level of abstraction, it can also be just

+bypassed for things that do not fit.

At the same time, conditioning op semantics on structured data types is a very

promising path towards extensibility to non-dense tensors as experience with

LIFT abstractions for

-[sparse](https://www.lift-project.org/publications/2016/harries16sparse.pdf)

-and [position-dependent

-arrays](https://www.lift-project.org/publications/2019/pizzuti19positiondependentarrays.pdf),

+[sparse](https://www.lift-project.org/publications/2016/harries16sparse.pdf) and

+[position-dependent arrays](https://www.lift-project.org/publications/2019/pizzuti19positiondependentarrays.pdf),

as well as [TACO](http://tensor-compiler.org/), has shown.

#### Property 2: Reversible Mappings Between Control and Data Structures

+

A `linalg.generic` *defines* the mapping between the iteration space (i.e. the

loops) and the data.

-Consider the following fully specified `linalg.generic` example.

-Here, the first `memref` is a 2-strided one on both of its dimensions,

-and the second `memref` uses an identity layout.

+Consider the following fully specified `linalg.generic` example. Here, the first

+`memref` is a 2-strided one on both of its dimensions, and the second `memref`

+uses an identity layout.

```

// File name: example2.mlir

@@ -176,195 +199,165 @@

```

The property "*Reversible Mappings Between Control and Data Structures*" is

-materialized by a lowering into a form that will resemble:

-```

-// Run: mlir-opt example2.mlir -allow-unregistered-dialect -convert-linalg-to-loops

-#map0 = affine_map<(d0, d1) -> (d0 * 2 + d1 * 2)>

-func @example(%arg0: memref<8x?xf32, #map0>, %arg1: memref<?xvector<4xf32>>) {

- %c8 = constant 8 : index

- %c0 = constant 0 : index

- %c1 = constant 1 : index

- %0 = dim %arg0, %c1 : memref<8x?xf32, #map0>

- scf.for %arg2 = %c0 to %0 step %c1 {

- scf.for %arg3 = %c0 to %c8 step %c1 {

- %1 = load %arg0[%arg3, %arg2] : memref<8x?xf32, #map0>

- %2 = load %arg1[%arg3] : memref<?xvector<4xf32>>

- %3 = "some_compute"(%1, %2) : (f32, vector<4xf32>) -> vector<4xf32>

- store %3, %arg1[%arg3] : memref<?xvector<4xf32>>

- }

- }

- return

-}

-```

-This mapping needs to be reversible because we want to be

-able to go back and forth between the two and answer questions such as:

-- Given a subset of the iteration space, what subset of data does it read and

-write?

-- Given a subset of data read or written, what subset of the iteration space

-is responsible for this read or write?

+materialized by a lowering into a form that will resemble:

+

+```

+// Run: mlir-opt example2.mlir -allow-unregistered-dialect -convert-linalg-to-loops

+#map0 = affine_map<(d0, d1) -> (d0 * 2 + d1 * 2)>

+

+func @example(%arg0: memref<8x?xf32, #map0>, %arg1: memref<?xvector<4xf32>>) {

+  %c8 = constant 8 : index

+  %c0 = constant 0 : index

+  %c1 = constant 1 : index

+  %0 = dim %arg0, %c1 : memref<8x?xf32, #map0>

+  scf.for %arg2 = %c0 to %0 step %c1 {

+    scf.for %arg3 = %c0 to %c8 step %c1 {

+      %1 = load %arg0[%arg3, %arg2] : memref<8x?xf32, #map0>

+      %2 = load %arg1[%arg3] : memref<?xvector<4xf32>>

+      %3 = "some_compute"(%1, %2) : (f32, vector<4xf32>) -> vector<4xf32>

+      store %3, %arg1[%arg3] : memref<?xvector<4xf32>>

+    }

+  }

+  return

+}

+```

+

+This mapping needs to be reversible because we want to be able to go back and

+forth between the two and answer questions such as:

+

+- Given a subset of the iteration space, what subset of data does it read and

+  write?

+- Given a subset of data read or written, what subset of the iteration space is

+  responsible for this read or write?

Answering these `2` questions is one of the main analyses that Linalg uses to

implement transformations such as tiling, tiled producer-consumer fusion, and

promotion to temporary buffers in fast memory.
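The two questions above can be sketched in Python. The sketch rests on these assumptions:

- Indexing maps are projected permutations, each encoded as a tuple of loop indices.
- Subsets are rectangular, half-open ranges.
- The helper names are hypothetical.

General affine maps, such as the strided layout in example2, need more machinery, for instance bounding boxes of affine images.

```python
from typing import List, Sequence, Tuple

Range = Tuple[int, int]  # half-open interval [lo, hi)


def data_accessed(loop_tile: Sequence[Range], amap: Sequence[int]) -> List[Range]:
    """Iteration subset -> data subset: the box of an operand touched by a tile."""
    return [loop_tile[d] for d in amap]


def iterations_touching(data_box: Sequence[Range], amap: Sequence[int],
                        loop_bounds: Sequence[int]) -> List[Range]:
    """Data subset -> iteration subset: the loop ranges that access `data_box`."""
    tile = [(0, ub) for ub in loop_bounds]  # loops absent from the map stay unconstrained
    for d, (lo, hi) in zip(amap, data_box):
        cur_lo, cur_hi = tile[d]
        tile[d] = (max(cur_lo, lo), min(cur_hi, hi))
    return tile


# Matmul-like maps over loops (i, j, k): A -> (i, k), C -> (i, j).
print(data_accessed([(0, 2), (0, 5), (0, 3)], (0, 2)))             # [(0, 2), (0, 3)]
print(iterations_touching([(0, 2), (1, 3)], (0, 1), [4, 5, 3]))    # [(0, 2), (1, 3), (0, 3)]
```

Tiled producer-consumer fusion chains the two directions:

1. The consumer tile determines the slice of the shared operand.
2. Mapping that slice back through the producer's output map gives the producer tile to recompute.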

-In the current implementation, `linalg.generic` uses a list of [AffineMaps](https://mlir.llvm.org/docs/LangRef/#affinemap-attribute) (see the `#indexing_maps` attribute in the previous examples).

-This is a pragmatic short-term solution, but in the longer term note that

-this property could be even evaluated dynamically, similarly to

-inspector-executor algorithms.

+In the current implementation, `linalg.generic` uses a list of

+[AffineMaps](https://mlir.llvm.org/docs/LangRef/#affinemap-attribute) (see the

+`#indexing_maps` attribute in the previous examples). This is a pragmatic

+short-term solution, but in the longer term note that this property could be

+even evaluated dynamically, similarly to inspector-executor algorithms.

#### Property 3: The Type Of Iterators is Defined Explicitly

+

A `linalg.generic` op fully *declares* the type of its iterators. This

information is used in transformations.

These properties are derived from established practice in the field and mirror

-the properties from Ken Kennedy's [Optimizing Compilers for Modern Architectures](

-https://www.elsevier.com/books/optimizing-compilers-for-modern-architectures/allen/978-0-08-051324-9).

-The key idea of legality of loop transformations expressed by Kennedy is

-that ***the lexicographic order of all dependence vectors must be

-preserved***.

+the properties from Ken Kennedy's

+[Optimizing Compilers for Modern Architectures](https://www.elsevier.com/books/optimizing-compilers-for-modern-architectures/allen/978-0-08-051324-9).

+The key idea of legality of loop transformations expressed by Kennedy is that

+***the lexicographic order of all dependence vectors must be preserved***.

This can be better captured directly at the loop level thanks to specific

-iterator types, among which:

-*parallel*, *reduction*, *partition*, *permutable/monotonic*, *sequential*,

-*dependence distance*, ...

+iterator types, among which: *parallel*, *reduction*, *partition*,

+*permutable/monotonic*, *sequential*, *dependence distance*, ...

-These types are traditionally the result of complex dependence analyses and

-have been referred to as "*bands*" in the polyhedral community (e.g. *parallel

+These types are traditionally the result of complex dependence analyses and have

+been referred to as "*bands*" in the polyhedral community (e.g. *parallel

bands*, *permutable bands*, etc, in

[ISL](https://en.wikipedia.org/wiki/Integer_set_library) schedule tree

parlance).

-Specifying the information declaratively in a `linalg.generic` allows

-conveying properties that may be hard (or even impossible) to derive from

-lower-level information. These properties can be brought all the way to the

-moment when they are useful for transformations, used and then discarded.

+Specifying the information declaratively in a `linalg.generic` allows conveying

+properties that may be hard (or even impossible) to derive from lower-level

+information. These properties can be brought all the way to the moment when they

+are useful for transformations, used and then discarded.

Additionally, these properties may also be viewed as a contract that the

-frontend/user guarantees and that the compiler may take advantage of. The

-common example is the use of data-dependent reduction semantics for

-specifying histogram computations. If the frontend has additional knowledge

-that proper atomic operations are available, it may be better to specify

-parallel semantics and use the special atomic in the computation region.

+frontend/user guarantees and that the compiler may take advantage of. The common

+example is the use of data-dependent reduction semantics for specifying

+histogram computations. If the frontend has additional knowledge that proper

+atomic operations are available, it may be better to specify parallel semantics

+and use the special atomic in the computation region.

At this time, Linalg only has an explicit use for *parallel* and *reduction*

loops but previous experience shows that the abstraction generalizes.
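A minimal Python sketch shows why declared iterator types help, using a matmul-shaped computation:

- Because `i` and `j` are declared *parallel*, a transformation may visit them in any order without running a dependence analysis. A shuffle simulates this here.
- The *reduction* dim `k` keeps its sequential order.
- Reordering a floating-point reduction would additionally rely on reassociation, which this sketch deliberately avoids.

The function name is illustrative only.

```python
import itertools
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix, shuffle_parallel: bool = False, seed: int = 0) -> Matrix:
    """C(i, j) += A(i, k) * B(k, j) with iterator_types = [parallel, parallel, reduction]."""
    m, depth, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    points = list(itertools.product(range(m), range(n)))  # the two parallel dims
    if shuffle_parallel:
        # Declared-parallel dims carry no dependence, so any visiting order is legal.
        random.Random(seed).shuffle(points)
    for i, j in points:
        for k in range(depth):  # the reduction dim keeps its sequential order here
            c[i][j] += a[i][k] * b[k][j]
    return c


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(a, b) == matmul(a, b, shuffle_parallel=True, seed=42))  # True
```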

#### Property 4: The Compute Payload is Specified With a Region

-A `linalg.generic` op has a compute payload that is fully generic thanks to

-the use of

+

+A `linalg.generic` op has a compute payload that is fully generic thanks to the

+use of

[Regions](https://github.com/llvm/llvm-project/blob/58265ad42a90ae8905be6a447cb42e53529a54a0/mlir/docs/LangRef.md#regions).

-The region takes as arguments the scalar elemental types of the tensor or

-buffer operands of the `linalg.generic`. For flexibility and ability to match

-library calls, additional special values may be passed. For instance, a

-`linalg.fill` operation takes a buffer and an additional scalar value.

+The region takes as arguments the scalar elemental types of the tensor or buffer

+operands of the `linalg.generic`. For flexibility and ability to match library

+calls, additional special values may be passed. For instance, a `linalg.fill`

+operation takes a buffer and an additional scalar value.

-At this time there are no additional restrictions to the region

-semantics. This is meant to allow the exploration of various design tradeoffs

-at the intersection of regions and iterator types.

-In particular, the frontend is responsible for the semantics of iterator types

-to correspond to the operations inside the region: the region can capture

-buffers arbitrarily and write into them. If this conflicts with some parallel

-iterator requirement, this is undefined behavior.

+At this time there are no additional restrictions on the region semantics. This

+is meant to allow the exploration of various design tradeoffs at the

+intersection of regions and iterator types. In particular, the frontend is

+responsible for ensuring that the iterator types match the semantics of the

+operations inside the region: the region can capture buffers arbitrarily and

+write into them. If this conflicts with some parallel iterator requirement, the

+behavior is undefined.

-Previous examples already elaborate compute payloads with an unregistered function `"some_compute"`. The following code snippet shows what the result will be when using a concrete operation `addf`:

-```

-// File name: example3.mlir

-#indexing_maps = [

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>

-]

-#attrs = {

- args_in = 2,

- args_out = 1,

- indexing_maps = #indexing_maps,

- iterator_types = ["parallel", "parallel"]

-}

-func @example(%A: memref, %B: memref, %C: memref) {

- linalg.generic #attrs %A, %B, %C {

- ^bb0(%a: f32, %b: f32, %c: f32):

- %d = addf %a, %b : f32

- linalg.yield %d : f32

- }: memref, memref, memref

- return

-}

-```

-This function basically element-wise adds up two matrices (`%A` and `%B`) and stores the result into another one (`%C`).

-The property "*The Compute Payload is Specified With a Region*" is

-materialized by a lowering into a form that will resemble:

-```

-// Run: mlir-opt example3.mlir -convert-linalg-to-loops

-#indexing_maps = [

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>

-]

-#attrs = {

- args_in = 2,

- args_out = 1,

- indexing_maps = #indexing_maps,

- iterator_types = ["parallel", "parallel"]

-}

-func @example(%A: memref<?x?xf32>, %B: memref<?x?xf32>, %C: memref<?x?xf32>) {

- linalg.generic #attrs %A, %B, %C {

- ^bb0(%a: f32, %b: f32, %c: f32):

- %d = addf %a, %b : f32

- linalg.yield %d : f32

- }: memref<?x?xf32>, memref<?x?xf32>, memref<?x?xf32>

- return

-}

-```

+Previous examples already use an unregistered function `"some_compute"` as the

+compute payload. The following code snippet shows the same structure with a

+concrete operation, `addf`:

+

+```

+// File name: example3.mlir

+#indexing_maps = [

+  affine_map<(i, j) -> (i, j)>,

+  affine_map<(i, j) -> (i, j)>,

+  affine_map<(i, j) -> (i, j)>

+]

+#attrs = {

+  args_in = 2,

+  args_out = 1,

+  indexing_maps = #indexing_maps,

+  iterator_types = ["parallel", "parallel"]

+}

+func @example(%A: memref<?x?xf32>, %B: memref<?x?xf32>, %C: memref<?x?xf32>) {

+  linalg.generic #attrs %A, %B, %C {

+  ^bb0(%a: f32, %b: f32, %c: f32):

+    %d = addf %a, %b : f32

+    linalg.yield %d : f32

+  }: memref<?x?xf32>, memref<?x?xf32>, memref<?x?xf32>

+  return

+}

+```

+

+This function adds two matrices (`%A` and `%B`) element-wise and stores the

+result into a third one (`%C`).

+

+The property "*The Compute Payload is Specified With a Region*" is materialized

+by a lowering into a form that will resemble:

+

+```

+// Run: mlir-opt example3.mlir -convert-linalg-to-loops

+func @example(%arg0: memref<?x?xf32>, %arg1: memref<?x?xf32>, %arg2: memref<?x?xf32>) {

+  %c0 = constant 0 : index

+  %c1 = constant 1 : index

+  %0 = dim %arg0, %c0 : memref<?x?xf32>

+  %1 = dim %arg0, %c1 : memref<?x?xf32>

+  scf.for %arg3 = %c0 to %0 step %c1 {

+    scf.for %arg4 = %c0 to %1 step %c1 {

+      %2 = load %arg0[%arg3, %arg4] : memref<?x?xf32>

+      %3 = load %arg1[%arg3, %arg4] : memref<?x?xf32>

+      %4 = addf %2, %3 : f32

+      store %4, %arg2[%arg3, %arg4] : memref<?x?xf32>

+    }

+  }

+  return

+}

+```

In the process of lowering to loops and lower-level constructs, similar

-requirements are encountered, as are discussed in the [inlined call op

-proposal](https://llvm.discourse.group/t/introduce-std-inlined-call-op-proposal/282/2).

-We expect to be able to reuse the common lower-level infrastructure provided

-it evolves to support both region arguments and captures.

+requirements are encountered, as are discussed in the

+[inlined call op proposal](https://llvm.discourse.group/t/introduce-std-inlined-call-op-proposal/282/2).

+We expect to be able to reuse the common lower-level infrastructure provided it

+evolves to support both region arguments and captures.
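Region semantics can be approximated by a minimal Python interpreter in which a callable stands in for the region. This is a hypothetical sketch, not the MLIR implementation, and it assumes the following:

- Indexing maps are projected permutations, written as tuples of loop indices: one per input, then one for the output.
- The iteration space is visited in plain lexicographic order.
- The current output element is passed as the last payload argument, mirroring the `%c` block argument of example3.

The same helper expresses both example3's element-wise `addf` and a row reduction.

```python
import itertools
from typing import Any, Callable, Sequence, Tuple


def _load(array: Any, index: Sequence[int]) -> Any:
    for i in index:
        array = array[i]
    return array


def _store(array: Any, index: Sequence[int], value: Any) -> None:
    for i in index[:-1]:
        array = array[i]
    array[index[-1]] = value


def generic(inputs: Sequence[Any], output: Any,
            maps: Sequence[Tuple[int, ...]], bounds: Sequence[int],
            payload: Callable[..., Any]) -> Any:
    """Interpret a linalg.generic-like op over nested Python lists (hypothetical helper)."""
    *in_maps, out_map = maps
    for point in itertools.product(*(range(b) for b in bounds)):
        args = [_load(t, [point[d] for d in m]) for t, m in zip(inputs, in_maps)]
        out_index = [point[d] for d in out_map]
        # Like the region's block arguments, the current output element comes last.
        args.append(_load(output, out_index))
        # The payload's return value plays the role of `linalg.yield`.
        _store(output, out_index, payload(*args))
    return output


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[10.0, 20.0], [30.0, 40.0]]
identity = (0, 1)
# Element-wise add, as in example3.
print(generic([A, B], [[0.0, 0.0], [0.0, 0.0]], [identity, identity, identity],
              [2, 2], lambda a, b, c: a + b))  # [[11.0, 22.0], [33.0, 44.0]]
# Row reduction: the output map (0,) drops loop j.
print(generic([A], [0.0, 0.0], [identity, (0,)], [2, 2],
              lambda a, acc: acc + a))  # [3.0, 7.0]
```

The interpreter ignores iterator types entirely. That is why they must be declared: nothing in the payload tells a transformation which loops may be reordered.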

#### Property 5: May Map To an External Library Call

+

A `linalg.generic` op may map to an external library call by specifying a

-`SymbolAttr`. At this level of abstraction, the important glue is the ability

-to perform transformations that preserve the structure necessary to ***call

-the external library after different transformations have been applied***.

+`SymbolAttr`. At this level of abstraction, the important glue is the ability to

+perform transformations that preserve the structure necessary to ***call the

+external library after different transformations have been applied***.

-This involves considerations related to preservation of op semantics

-and integration at the ABI level. Regardless of whether one wants to use

-external library calls or a custom ISA, the problem for codegen is similar:

-preservation of a fixed granularity.

+This involves considerations related to preservation of op semantics and

+integration at the ABI level. Regardless of whether one wants to use external

+library calls or a custom ISA, the problem for codegen is similar: preservation

+of a fixed granularity.

-Consider the following example that adds an additional attribute `library_call="pointwise_add"`

-that specifies the name of an external library call we intend to use:

-```

-// File name: example4.mlir

-#indexing_maps = [

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>,

- affine_map<(i, j) -> (i, j)>

-]

-#attrs = {

- args_in = 2,

- args_out = 1,

- indexing_maps = #indexing_maps,

- iterator_types = ["parallel", "parallel"],

- library_call = "pointwise_add"

-}

-func @example(%A: memref, %B: memref, %C: memref) {

- linalg.generic #attrs %A, %B, %C {

- ^bb0(%a: f32, %b: f32, %c: f32):

- %d = addf %a, %b : f32

- linalg.yield %d : f32

- }: memref, memref, memref

- return

-}

-```

-The property "*Map To an External Library Call*" is

-materialized by a lowering into a form that will resemble:

+Consider the following example that adds an additional attribute

+`library_call="pointwise_add"` that specifies the name of an external library

+call we intend to use:

+

+```

+// File name: example4.mlir

+#indexing_maps = [

+  affine_map<(i, j) -> (i, j)>,

+  affine_map<(i, j) -> (i, j)>,

+  affine_map<(i, j) -> (i, j)>

+]

+#attrs = {

+  args_in = 2,

+  args_out = 1,

+  indexing_maps = #indexing_maps,

+  iterator_types = ["parallel", "parallel"],

+  library_call = "pointwise_add"

+}

+func @example(%A: memref<?x?xf32>, %B: memref<?x?xf32>, %C: memref<?x?xf32>) {

+  linalg.generic #attrs %A, %B, %C {

+  ^bb0(%a: f32, %b: f32, %c: f32):

+    %d = addf %a, %b : f32

+    linalg.yield %d : f32

+  }: memref<?x?xf32>, memref<?x?xf32>, memref<?x?xf32>

+  return

+}

+```

+

+The property "*Map To an External Library Call*" is materialized by a lowering

+into a form that will resemble:

```

// Run: mlir-opt example4.mlir -convert-linalg-to-std

@@ -383,204 +376,138 @@

func @pointwise_add(memref, memref, memref) attributes {llvm.emit_c_interface}

```

-Which, after lowering to LLVM resembles:

-```

-// Run: mlir-opt example4.mlir -convert-linalg-to-std | mlir-opt -convert-std-to-llvm

-// Some generated code are omitted here.

-func @example(%arg0: !llvm<"float*">, ...) {

- ...

- llvm.call @pointwise_add(...) : (!llvm<"float*">, ...) -> ()

- return

-}

-llvm.func @pointwise_add(%arg0: !llvm<"float*">, ...) attributes {llvm.emit_c_interface} {

- ...

- llvm.call @_mlir_ciface_pointwise_add(%9, %19, %29) : (!llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }

-*">) -> ()

- llvm.return

-}

-llvm.func @_mlir_ciface_pointwise_add(!llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">) attributes {llvm.emit_c_interface}

-```

+Which, after lowering to LLVM, resembles:

+

+```

+// Run: mlir-opt example4.mlir -convert-linalg-to-std | mlir-opt -convert-std-to-llvm

+// Some generated code is omitted here.

+func @example(%arg0: !llvm<"float*">, ...) {

+  ...

+  llvm.call @pointwise_add(...) : (!llvm<"float*">, ...) -> ()

+  return

+}

+

+llvm.func @pointwise_add(%arg0: !llvm<"float*">, ...) attributes {llvm.emit_c_interface} {

+  ...

+  llvm.call @_mlir_ciface_pointwise_add(%9, %19, %29) : (!llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">) -> ()

+  llvm.return

+}

+llvm.func @_mlir_ciface_pointwise_add(!llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">, !llvm<"{ float*, float*, i64, [2 x i64], [2 x i64] }*">) attributes {llvm.emit_c_interface}

+```

##### Convention For External Library Interoperability

+

The `linalg` dialect adopts a convention that is similar to `BLAS` when

-offloading operations to fast library implementations: pass a non-owning

-pointer to input and output data with additional metadata. This convention

-is also found in libraries such as `MKL`, `OpenBLAS`, `BLIS`, `cuBLAS`,

-`cuDNN`, etc.. and more generally at interface points across language

-boundaries (e.g. C++ / Python).

+offloading operations to fast library implementations: pass a non-owning pointer

+to input and output data with additional metadata. This convention is also found

+in libraries such as `MKL`, `OpenBLAS`, `BLIS`, `cuBLAS`, `cuDNN`, etc., and

+more generally at interface points across language boundaries (e.g. C++ /

+Python).

-Generally, `linalg` passes non-owning pointers to View data structures

-to pre-compiled library calls linked externally.

+Generally, `linalg` passes non-owning pointers to View data structures to

+pre-compiled library calls linked externally.

-There is an [ongoing

-discussion](https://llvm.discourse.group/t/lowering-optional-attributes-in-linalg-structuredops-to-standard-dialect/333/3)

+There is an

+[ongoing discussion](https://llvm.discourse.group/t/lowering-optional-attributes-in-linalg-structuredops-to-standard-dialect/333/3)

on the topic of extending interoperability in the presence of key attributes.
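The descriptor passed to `_mlir_ciface_pointwise_add` above is the LLVM struct `{ float*, float*, i64, [2 x i64], [2 x i64] }`. A minimal Python `ctypes` sketch of that layout follows, with these caveats:

- The field names (`allocated`, `aligned`, `offset`, `sizes`, `strides`) are descriptive choices made here.
- `pointwise_add` is a hypothetical pure-Python stand-in for an externally linked kernel, not an MLIR API.

The sketch shows the non-owning convention: each descriptor only points into a buffer that the caller keeps alive.

```python
import ctypes


class MemRef2DF32(ctypes.Structure):
    # Same field order as the LLVM struct { float*, float*, i64, [2 x i64], [2 x i64] }.
    _fields_ = [
        ("allocated", ctypes.POINTER(ctypes.c_float)),
        ("aligned", ctypes.POINTER(ctypes.c_float)),
        ("offset", ctypes.c_int64),
        ("sizes", ctypes.c_int64 * 2),
        ("strides", ctypes.c_int64 * 2),
    ]


def make_descriptor(buf: ctypes.Array, rows: int, cols: int) -> MemRef2DF32:
    """Non-owning row-major (identity layout) view over `buf`."""
    ptr = ctypes.cast(buf, ctypes.POINTER(ctypes.c_float))
    return MemRef2DF32(ptr, ptr, 0,
                       (ctypes.c_int64 * 2)(rows, cols),
                       (ctypes.c_int64 * 2)(cols, 1))


def linear_index(d: MemRef2DF32, i: int, j: int) -> int:
    return d.offset + i * d.strides[0] + j * d.strides[1]


def pointwise_add(a: MemRef2DF32, b: MemRef2DF32, c: MemRef2DF32) -> None:
    """Hypothetical stand-in for an external kernel: C = A + B through descriptors."""
    for i in range(c.sizes[0]):
        for j in range(c.sizes[1]):
            c.aligned[linear_index(c, i, j)] = (
                a.aligned[linear_index(a, i, j)] + b.aligned[linear_index(b, i, j)])


a_buf = (ctypes.c_float * 4)(1.0, 2.0, 3.0, 4.0)
b_buf = (ctypes.c_float * 4)(10.0, 20.0, 30.0, 40.0)
c_buf = (ctypes.c_float * 4)()
pointwise_add(make_descriptor(a_buf, 2, 2), make_descriptor(b_buf, 2, 2),
              make_descriptor(c_buf, 2, 2))
print(list(c_buf))  # [11.0, 22.0, 33.0, 44.0]
```

Passing `ctypes.byref(desc)` to a real C function would match the pointer-to-struct parameters shown in the LLVM signature.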

#### Property 6: Perfectly Nested Writes To The Whole Output Operands

+

Perfectly nested loops form a particularly important class of structure that

enables key loop transformations such as tiling and mapping to library calls.

Unfortunately, this type of structure is easily broken by transformations such

as partial loop fusion. Tiling and mapping to library calls become more

-challenging, or even infeasible. Linalg ops adopt perfect-nestedness

-as a first-class property: the structure cannot be broken and is

-transported in the IR by construction.

+challenging, or even infeasible. Linalg ops adopt perfect-nestedness as a

+first-class property: the structure cannot be broken and is transported in the

+IR by construction.

A `linalg.generic` op represents a perfectly nested loop nest that writes the

-entire memory region. This is a structural constraint across regions and

-loops that has proven to be key in simplifying transformations.

+entire memory region. This is a structural constraint across regions and loops

+that has proven to be key in simplifying transformations.

-One particular point to mention is that converting imperfectly nested code

-into perfectly nested code can often be done with enough loop distribution

-and embedding of conditionals down to the innermost loop level.

+One particular point to mention is that converting imperfectly nested code into

+perfectly nested code can often be done with enough loop distribution and

+embedding of conditionals down to the innermost loop level.
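Embedding conditionals down to the innermost loop level can be sketched in Python on a row sum. The sketch assumes a rectangular input with at least one column; the function names are illustrative only.

In the imperfect nest, the initialization statement sits between the two loops. The perfect nest sinks it into the innermost loop under a `k == 0` predicate and produces the same result.

```python
from typing import List


def row_sums_imperfect(a: List[List[float]]) -> List[float]:
    c = [0.0] * len(a)
    for i in range(len(a)):
        c[i] = 0.0                      # statement between the two loops
        for k in range(len(a[i])):
            c[i] += a[i][k]
    return c


def row_sums_perfect(a: List[List[float]]) -> List[float]:
    # Initial contents are irrelevant: each c[i] is overwritten when k == 0.
    # Assumes every row has at least one element.
    c = [float("nan")] * len(a)
    for i in range(len(a)):
        for k in range(len(a[i])):
            # The initialization is sunk into the innermost loop under a predicate.
            acc = 0.0 if k == 0 else c[i]
            c[i] = acc + a[i][k]
    return c


m = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
print(row_sums_imperfect(m), row_sums_perfect(m))  # [6.0, 15.0] [6.0, 15.0]
```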

Previous experience with Tensor Comprehensions gave us the intuition that

-forcing innermost control-flow nesting is a lot like writing data-parallel

-code with arrays of boolean values and predication.

-This type of trick has also been used before in polyhedral compilers to

-convert non-affine control into affine compute dependencies.

+forcing innermost control-flow nesting is a lot like writing data-parallel code

+with arrays of boolean values and predication. This type of trick has also been

+used before in polyhedral compilers to convert non-affine control into affine

+compute dependencies.

While it may be possible to automate such rewrites from generic IR,

`linalg.generic` just forces the semantics for now.

The key implication is that this conversion to deep predication needs to be

-undone once we are done with Linalg transformations.

-After iterators and induction variables are materialized (i.e. after lowering

-out of `linalg.generic` occurred), the overall performance will be greatly

-influenced by the quality of canonicalizations, foldings and *Loop Independent

-Code Motion* (LICM).

+undone once we are done with Linalg transformations. After iterators and

+induction variables are materialized (i.e. after lowering out of

+`linalg.generic` occurred), the overall performance will be greatly influenced

+by the quality of canonicalizations, foldings and *Loop-Invariant Code Motion*

+(LICM).

In the grander scheme, the reliance on late LICM was deemed a necessary risk.

#### Putting it Together

+

As it stands, the six properties above define the semantics of a

`linalg.generic` op. It is an open question whether all of these semantics are

strictly necessary in practice and whether some should or could be derived

-automatically while still maintaining the [core guiding

-principles](#guiding_principles).

+automatically while still maintaining the

+[core guiding principles](#guiding_principles).

For the time being, we have settled on the combination of these properties

because of empirical evidence building and working on multiple high-level

compilers. As we lay those down and engage more with the community, we expect

multiple rounds of discussions and design changes to the original architecture.

-### Tensors and Buffers: Conventions and Limitations

-

-Tensors are immutable SSA values, buffers are mutable regions of memory subject

-to side-effects and aliasing. As a consequence, output buffers are passed as

-operands whereas output tensors are new SSA values corresponding to op results.

-Inputs can be arbitrary tensors or buffers and are always passed as operands.

-

-The following convention is currently in-flight and is in the process of

-replacing other existing conventions. The following convention currently applies

-to "named" structured ops which are auto-generated by the linalg-ods tool.

-

-The convention adopted is as follows:

-

-1. A first block of `ins` op operands hold read-only inputs of ShapedType.

-2. An optional second block of `outs` op operands hold read-write output

- buffers of MemRefType.

-3. An optional third block of `init` operands hold initialization tensors of

- RankedTensorType. Such tensors can appear when the op performs a reduction

- and returns a tensor.

-

-Structured ops with fully parallel semantics, have empty `init`. They may either

-write in-place into `outs` buffers or return new tensors.

-

-Structured ops with reduction semantics and output tensor(s) however have

-additional restrictions:

-

-1. They can only return a single tensor for now.

-2. They cannot have any output buffer operand (i.e. `outs` is empty).

-3. They have exactly one `init` tensor of the same type as the unique output

- tensor. Such an `init` tensor does not have an explicit associate indexing

- map. Instead the map of the result tensor is used to signify that the `init`

- and the `result` are "tied".

-

-Points 1. and 2. keep complexity of the representation in check by allowing only

-a single result tensor, when reductions are present.

-

-Point 3. is related to the fact that SSA values cannot represent in-place

-updates. Instead, linalg adopts a similar convention that exists in e.g.

-`vector.outerproduct`: the value that is reduced into is passed as an explicit

-argument and a new result of the same shape is produced.

-

-It is expected buffer allocation will fold this last input onto the result in a

-single output buffer argument, which is why the same indexing map is required:

-the last input operand is said to be "tied" to the result.

-

-Alternative, more complex representations, would allow for:

-

-1. Multiple results and `init` tensors in arbitrary orders, which could be

- captured by an extra ArrayAttr of position pairs.

-2. Relaxing the conditions on the indexing map equalities on the each pair and

- e.g. allow implicit broadcasts of the input.

-

-These representations are deemed unnecessarily complex for now and are left for

-future discussion.

-

-As an illustration, the syntax for a `linalg.matmul` writing into a buffer is:

-

-```

-linalg.matmul ins(%a, %b : memref, tensor)

- outs(%c : memref)

-```

-

-, whereas the syntax for a `linalg.matmul` returning a new tensor is:

-

-```

-%d = linalg.matmul ins(%a, %b : tensor, memref)

- init(%c : tensor)

- -> tensor

-```

-

### Data Representation: Views

-The current implementation uses the [Strided MemRef (a.k.a View)](

-https://groups.google.com/a/tensorflow.org/forum/#!topic/mlir/MaL8m2nXuio)

+

+The current implementation uses the

+[Strided MemRef (a.k.a View)](https://groups.google.com/a/tensorflow.org/forum/#!topic/mlir/MaL8m2nXuio)

abstraction. The name *View* is used interchangeably in `linalg` to signify

-*Strided MemRef*.

-In the future we expect to use other structured data types and

+*Strided MemRef*. In the future we expect to use other structured data types and

support ragged, mixed-sparse and other types. We expect to draw on the

experience from existing LIFT abstractions for

-[sparse](https://www.lift-project.org/publications/2016/harries16sparse.pdf)

-and [position-dependent

-arrays](https://www.lift-project.org/publications/2019/pizzuti19positiondependentarrays.pdf).

+[sparse](https://www.lift-project.org/publications/2016/harries16sparse.pdf) and

+[position-dependent arrays](https://www.lift-project.org/publications/2019/pizzuti19positiondependentarrays.pdf).

### Metadata Ops

+

A set of ops that manipulate metadata but do not move memory. These ops take

-`view` operands + extra attributes and return new `view`s. The returned

-`view`s generally alias the operand `view`. At the moment the existing ops

-are:

+`view` operands + extra attributes and return new `view`s. The returned `view`s

+generally alias the operand `view`. At the moment the existing ops are:

- * `std.view`,

- * `std.subview`,

- * `std.transpose`.

- * `linalg.range`,

- * `linalg.slice`,

- * `linalg.reshape`,

+* `std.view`,

+* `std.subview`,

+* `std.transpose`,

+* `linalg.range`,

+* `linalg.slice`,

+* `linalg.reshape`.

Future ops are added on a per-need basis but should include:

- * `linalg.tile`,

- * `linalg.intersection`,

- * `linalg.convex_union`,

- * `linalg.difference` (would need to work on a list of views).

+* `linalg.tile`,

+* `linalg.intersection`,

+* `linalg.convex_union`,

+* `linalg.difference` (would need to work on a list of views).

These additional operations correspond to abstractions that have been known to

work in the field of large-scale distributed stencil computations.

-In a longer-term future, the abstractions from [Legion data-centric

-programming model](https://legion.stanford.edu/overview/) seem generally

-appealing.

+In the longer term, the abstractions from the

+[Legion data-centric programming model](https://legion.stanford.edu/overview/)

+seem generally appealing.

### Named Payload-Carrying Ops

+

Additionally, `linalg` provides a small set of common named operations:

- * `linalg.copy`,

- * `linalg.fill`,

- * `linalg.dot`,

- * `linalg.matmul`,

- * `linalg.conv`.

+* `linalg.copy`,

+* `linalg.fill`,

+* `linalg.dot`,

+* `linalg.matmul`,

+* `linalg.conv`.

These named operations adhere to the `linalg.generic` op interface. Work is in

progress to define declarative mechanisms to automatically generate named ops

@@ -608,7 +535,7 @@

1. The operations used to specify computations use EDSC intrinsics so that they

can easily be parsed and emitted into a simple region builder without

resorting to more general MLIR parsing.

-1. Reduction dimensions are specified with angle bracket notation on the

+1. Reduction dimensions are specified with angle bracket notation on the

operation they apply to (e.g. `std_add` specifies that `k` is a reduction

dimension). In TC, a reduction is specified with `op=` operator and the

reduction dimensions are inferred.

@@ -677,23 +604,24 @@

```

## Open Issues and Design Alternatives

-Multiple open issues and design alternatives are in flight and it is time to

-lay them out for the community to discuss and pick apart:

-1. Should `linalg.generic` support nesting?

-1. Should `linalg.generic` regions take views or only scalars?

-1. Should we try to solve automatic differentiation at this level of

-abstraction?

-1. Are all the six properties really necessary?

-1. Is this relying too much on declarative specification and would we be

-better off relying more on analyses?

-1. Is this general enough for the community's needs? If not how should this be

-extended, if at all?

-...

+

+Multiple open issues and design alternatives are in flight and it is time to lay

+them out for the community to discuss and pick apart:

+

+1. Should `linalg.generic` support nesting?

+1. Should `linalg.generic` regions take views or only scalars?

+1. Should we try to solve automatic differentiation at this level of

+ abstraction?

+1. Are all the six properties really necessary?

+1. Is this relying too much on declarative specification and would we be better

+ off relying more on analyses?

+1. Is this general enough for the community's needs? If not, how should this be

+ extended, if at all?

+...

These key questions (and many more) should really be considered in the general

context of MLIR in which different levels of IR interoperate seamlessly. In

-practice, it is not necessary (or beneficial) to try and solve all problems in the

-same IR.

+practice, it is not necessary (or beneficial) to try and solve all problems in

+the same IR.

## Operations

diff --git a/mlir/include/mlir/Dialect/Linalg/Analysis/DependenceAnalysis.h b/mlir/include/mlir/Dialect/Linalg/Analysis/DependenceAnalysis.h

--- a/mlir/include/mlir/Dialect/Linalg/Analysis/DependenceAnalysis.h

+++ b/mlir/include/mlir/Dialect/Linalg/Analysis/DependenceAnalysis.h

@@ -45,19 +45,17 @@

class LinalgDependenceGraph {

public:

enum DependenceType { RAR = 0, RAW, WAR, WAW, NumTypes };

- struct LinalgOpView {

- Operation *op;

- unsigned operandIndex;

- };

+ // TODO: OpOperand tracks dependencies on buffer operands. Tensor results will

+ // need an extension to use OpResult.

struct LinalgDependenceGraphElem {

// dependentOpView may be either:

// 1. src in the case of dependencesIntoGraphs.

// 2. dst in the case of dependencesFromDstGraphs.

- LinalgOpView dependentOpView;

+ OpOperand *dependentOpView;

// View in the op that is used to index in the graph:

// 1. src in the case of dependencesFromDstGraphs.

// 2. dst in the case of dependencesIntoGraphs.

- LinalgOpView indexingOpView;

+ OpOperand *indexingOpView;

// Type of the dependence.

DependenceType dependenceType;

};

@@ -161,8 +159,8 @@

// Uses OpOperand to keep operations and views together and avoid usage errors

// related to src/dst and producer/consumer terminology in the context of

// dependences.

- void addDependenceElem(DependenceType dt, LinalgOpView indexingOpView,

- LinalgOpView dependentOpView);

+ void addDependenceElem(DependenceType dt, OpOperand *indexingOpView,

+ OpOperand *dependentOpView);

/// Implementation detail for findCoveringxxx.

SmallVector
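The switch from the `LinalgOpView` pair to `OpOperand *` keeps the same information in a single pointer, because an operand slot knows both its owning operation and its position (in MLIR these are `OpOperand::getOwner()` and `OpOperand::getOperandNumber()`). A minimal standalone C++ sketch of that equivalence follows; `MiniOperation`, `MiniOpOperand`, `OpViewPair` and `DependenceElem` are hypothetical stand-ins, not the MLIR classes.

```cpp
#include <cassert>
#include <vector>

struct MiniOperation;

// Hypothetical stand-in for mlir::OpOperand: a slot that knows its owner and
// its position in the owner's operand list.
struct MiniOpOperand {
  MiniOperation *owner;
  unsigned number; // Position of this slot in owner->operands.
  int value;       // Stand-in for the used SSA value.
};

// Hypothetical stand-in for mlir::Operation. Copies are disabled so the
// operand pointers handed out below cannot dangle after a copy.
struct MiniOperation {
  std::vector<MiniOpOperand> operands;
  explicit MiniOperation(const std::vector<int> &values) {
    operands.reserve(values.size());
    for (unsigned i = 0; i < values.size(); ++i)
      operands.push_back({this, i, values[i]});
  }
  MiniOperation(const MiniOperation &) = delete;
  MiniOperation &operator=(const MiniOperation &) = delete;
};

// Old shape of the data: an (op, operandIndex) pair.
struct OpViewPair {
  MiniOperation *op;
  unsigned operandIndex;
};

// New shape of the data: one pointer per side of the dependence.
struct DependenceElem {
  MiniOpOperand *dependentOpView;
  MiniOpOperand *indexingOpView;
};

// The pair is recoverable from the operand pointer...
OpViewPair toPair(const MiniOpOperand *operand) {
  return {operand->owner, operand->number};
}

// ...and the operand pointer from the pair.
MiniOpOperand *fromPair(OpViewPair p) {
  assert(p.operandIndex < p.op->operands.size() && "operand index out of range");
  return &p.op->operands[p.operandIndex];
}
```

In the sketch, operand pointers stay valid because a `MiniOperation` is neither copied nor resized after construction; whether the same holds for a real op depends on how its operands are mutated.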

diff --git a/mlir/include/mlir/Dialect/Linalg/EDSC/Builders.h b/mlir/include/mlir/Dialect/Linalg/EDSC/Builders.h

--- a/mlir/include/mlir/Dialect/Linalg/EDSC/Builders.h

+++ b/mlir/include/mlir/Dialect/Linalg/EDSC/Builders.h

@@ -30,8 +30,8 @@

namespace edsc {

inline void defaultRegionBuilder(ValueRange args) {}

-/// Build a `linalg.generic` op with the specified `inputs`, `outputBuffers`,

-/// `initTensors`, `resultTensorsTypes` and `region`.

+/// Build a `linalg.generic` op with the specified `inputs`, `outputs`,

+/// `resultTensorTypes` and `region`.

///

/// `otherValues` and `otherAttributes` may be passed and will be appended as

/// operands and attributes respectively.

@@ -41,15 +41,12 @@

///

/// 1. `inputs` may contain StructuredIndexed that capture either buffer or

/// tensor values.

-/// 2. `outputsBuffers` may contain StructuredIndexed that capture buffer

-/// values.

-/// 3. `initTensors` contain tensor values, without indexing maps.

-/// 4. `resultTensorTypes` may contain StructuredIndexed that capture return

-/// tensor types.

+/// 2. `outputs` may contain StructuredIndexed that capture either buffer or

+/// tensor values. In the future this will be extended with ranked shape values.

+/// 3. `resultTensorTypes` may contain return tensor types.

Operation *makeGenericLinalgOp(

ArrayRef iteratorTypes, ArrayRef inputs,

- ArrayRef outputBuffers, ArrayRef initTensors,

- ArrayRef resultTensorTypes,

+ ArrayRef outputs, TypeRange resultTensorTypes,

function_ref regionBuilder = defaultRegionBuilder,

ArrayRef otherValues = {}, ArrayRef otherAttributes = {});

diff --git a/mlir/include/mlir/Dialect/Linalg/IR/LinalgOps.h b/mlir/include/mlir/Dialect/Linalg/IR/LinalgOps.h

--- a/mlir/include/mlir/Dialect/Linalg/IR/LinalgOps.h

+++ b/mlir/include/mlir/Dialect/Linalg/IR/LinalgOps.h

@@ -9,7 +9,6 @@

#ifndef MLIR_DIALECT_LINALG_LINALGOPS_H_

#define MLIR_DIALECT_LINALG_LINALGOPS_H_

-#include "mlir/Dialect/Linalg/IR/LinalgTraits.h"

#include "mlir/Dialect/Linalg/IR/LinalgTypes.h"

#include "mlir/Dialect/StandardOps/IR/Ops.h"

#include "mlir/Dialect/Utils/StructuredOpsUtils.h"

@@ -111,9 +110,17 @@

void getDimsOfType(Operation *op, StringRef iteratorTypeName,

SmallVectorImpl &res);

+namespace detail {

+LogicalResult verifyStructuredOpInterface(Operation *op);

+} // namespace detail

} // namespace linalg

} // namespace mlir

+namespace mlir {

+namespace linalg {

+class IndexedGenericOp;

+} // namespace linalg

+} // namespace mlir

#include "mlir/Dialect/Linalg/IR/LinalgStructuredOpsInterfaces.h.inc"

#define GET_OP_CLASSES


diff --git a/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOps.td b/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOps.td

--- a/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOps.td

+++ b/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOps.td

@@ -19,26 +19,6 @@

include "mlir/Interfaces/CopyOpInterface.td"

include "mlir/Interfaces/SideEffectInterfaces.td"

-// The Linalg `NInputs` trait provides the API for ops that are known

-// to have a specified number of inputs, all passed as operands.

-// See Linalg/LinalgTraits.h for implementation details and usage.

-class NInputs :

- NativeOpTrait<"linalg::NInputs<" # !cast(n) # ">::Impl"> {}

-

-// The Linalg `ZeroInitTensors` trait provides the API for ops that are known

-// to not have input tensor operands.

-// See Linalg/LinalgTraits.h for implementation details and usage.

-def ZeroInitTensors : NativeOpTrait<"linalg::ZeroInitTensors"> {}

-

-// The Linalg `NOutputs` trait provides the API for ops that are known

-// to have a specified number of outputs, all passed as operands.

-// See Linalg/LinalgTraits.h for implementation details and usage.

-class NOutputs :

- NativeOpTrait<"linalg::NOutputs<" # !cast(n) # ">::Impl"> {}

-

-def StructuredOpTraits : NativeOpTrait<"linalg::StructuredOpTraits">;

-def NamedStructuredOpTrait : NativeOpTrait<"linalg::NamedStructuredOpTrait">;

-

// Base Tablegen class for Linalg ops.

// Linalg ops that correspond to library calls operate on ShapedType as their

// first operands. These may be optionally followed by non-view operands

@@ -50,7 +30,6 @@

class LinalgStructured_Op props>

: LinalgStructuredBase_Op])> {

code libraryCallName = [{

std::string getLibraryCallName() {

@@ -65,12 +44,7 @@

//===----------------------------------------------------------------------===//

// At the moment these are not declarative and require a bunch of C++ code.

// In the future, these should be migrated to a declarative specification.

-def CopyOp : LinalgStructured_Op<"copy", [

- CopyOpInterface,

- NInputs<1>,

- ZeroInitTensors,

- NOutputs<1>

- ]> {

+def CopyOp : LinalgStructured_Op<"copy", [CopyOpInterface]> {

let description = [{

Copies the data in the input view into the output view.

@@ -137,6 +111,14 @@

}]>];

let extraClassDeclaration = libraryCallName # [{

+ ValueRange inputs() {

+ return OperandRange{getOperands().begin(), getOperands().begin() + 1};

+ }

+

+ ValueRange outputs() {

+ return OperandRange{getOperands().begin() + 1, getOperands().begin() + 2};

+ }

+

// Rank-polymorphic.

// filling_value -> O(ivs) with parallel iterators.

ArrayAttr iterator_types() {

@@ -170,14 +152,16 @@

let hasCanonicalizer = 1;

}

-def FillOp : LinalgStructured_Op<"fill", [

- NInputs<0>,

- ZeroInitTensors,

- NOutputs<1>]> {

-

+def FillOp : LinalgStructured_Op<"fill", []> {

let arguments = (ins AnyStridedMemRef:$output,

AnyTypeOf<[AnyFloat, AnySignlessInteger, AnyVector]>:$value);

let extraClassDeclaration = libraryCallName # [{

+ ValueRange inputs() { return {}; }

+

+ ValueRange outputs() {

+ return OperandRange{getOperands().begin(), getOperands().begin() + 1};

+ }

+

// Rank-polymorphic.

// filling_value -> O(ivs) with parallel iterators.

ArrayAttr iterator_types() {

@@ -276,13 +260,8 @@

}];

}

-def ConvOp : PoolingBase_Op<"conv", [

- NInputs<2>,

- // Despite having reductions, this manually defined ConvOp may only take

- // memref operands and can never have init tensors.

- ZeroInitTensors,

- NOutputs<1>]> {

-

+// Only supports buffer semantics.

+def ConvOp : PoolingBase_Op<"conv", []> {

let description = [{

Generic n-D convolution as described in the TF documentation:

https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/nn/convolution

@@ -313,6 +292,14 @@

OptionalAttr:$padding);

let extraClassDeclaration = commonUtils # [{

+ ValueRange inputs() {

+ return OperandRange{getOperands().begin(), getOperands().begin() + 2};

+ }

+

+ ValueRange outputs() {

+ return OperandRange{getOperands().begin() + 2, getOperands().begin() + 3};

+ }

+

// TODO: extend to support more than 1 dimensions and potentially grouping

// too.

unsigned getNumBatchDimensions() { return 1; }
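With the `NInputs`/`NOutputs` traits removed, the manually defined `copy`, `fill` and `conv` ops above spell out their operand layout through `inputs()` and `outputs()`, and the interface's default `getNumInputs()`/`getNumOutputs()` are the sizes of those ranges. A minimal C++ sketch of that layout, assuming hypothetical `FixedOp`, `OperandSlice`, `OperandLayout` and `layoutOf` names, with half-open `[begin, end)` index pairs standing in for `OperandRange`:

```cpp
#include <cassert>
#include <cstddef>
#include <utility>

// Hypothetical half-open [begin, end) slice of an op's operand list.
using OperandSlice = std::pair<std::size_t, std::size_t>;

struct OperandLayout {
  OperandSlice inputs;
  OperandSlice outputs;
};

enum class FixedOp { Copy, Fill, Conv };

// Layouts as encoded by the inputs()/outputs() definitions in this patch.
OperandLayout layoutOf(FixedOp op) {
  switch (op) {
  case FixedOp::Copy:
    return {{0, 1}, {1, 2}};
  case FixedOp::Fill:
    return {{0, 0}, {0, 1}}; // No inputs; the fill value is not counted.
  case FixedOp::Conv:
    return {{0, 2}, {2, 3}};
  }
  return {{0, 0}, {0, 0}}; // Unreachable for valid enumerators.
}

// Analogue of the default getNumInputs(): the size of the inputs() range.
std::size_t getNumInputs(FixedOp op) {
  OperandSlice s = layoutOf(op).inputs;
  return s.second - s.first;
}

// Analogue of the default getNumOutputs(): the size of the outputs() range.
std::size_t getNumOutputs(FixedOp op) {
  OperandSlice s = layoutOf(op).outputs;
  return s.second - s.first;
}
```

Keeping the counts derived from the ranges means an op only has to describe its layout once, instead of repeating it in a trait argument.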

@@ -335,6 +322,11 @@

// parallelized across; i.e. [zs] in the TF notation above whose number

// match `xs` (i.e. 1 window loop per "image" dimension).

// This may evolve in the future.

+ // Assert on ill-formed ops before computing nWin: the unsigned subtraction

+ // below would otherwise underflow before the verifier gets a chance to run.

+ assert(nPar > getNumBatchDimensions() + getNumInputFeatureDimensions() &&

+ "expected at least one window dimension (i.e. memref ranks greater "

+ "than 2)");

unsigned nWin =

nPar - getNumBatchDimensions() - getNumInputFeatureDimensions();

SmallVector iters(nPar, getParallelIteratorTypeName());

@@ -352,7 +344,8 @@

ArrayAttr indexing_maps() {

MLIRContext *context = getContext();

auto nWin = getNumWindowLoops();

- assert(nWin > 0 && "expected at least one window dimension");

+ assert(nWin > 0 && "expected at least one window dimension (i.e. memref "

+ "ranks greater than 2)");

unsigned idx = 0;

// In the following, AffineDimExprs are indexed in loop order:

// [ b, xs, k, q, zs]
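The new assertions guard an unsigned subtraction: when `nPar` is not larger than the number of batch plus input-feature dimensions, `nPar - getNumBatchDimensions() - getNumInputFeatureDimensions()` either yields zero window loops or wraps around instead of going negative. A minimal C++ sketch of that arithmetic, using hypothetical helper names; the checked variant reports failure instead of asserting:

```cpp
#include <cassert>

// Hypothetical helper mirroring the nWin computation in this hunk. The
// subtraction is unsigned, so it wraps when nPar < nBatch + nInputFeature.
unsigned numWindowLoopsUnchecked(unsigned nPar, unsigned nBatch,
                                 unsigned nInputFeature) {
  return nPar - nBatch - nInputFeature;
}

// Hypothetical checked variant: returns false when the op has no window
// dimension, e.g. so a caller can emit a diagnostic.
bool numWindowLoopsChecked(unsigned nPar, unsigned nBatch,
                           unsigned nInputFeature, unsigned &nWin) {
  if (nPar <= nBatch + nInputFeature)
    return false; // Zero window loops, or the subtraction would wrap.
  nWin = nPar - nBatch - nInputFeature;
  return true;
}
```

Since `assert` is compiled out when `NDEBUG` is defined, the assertions in the patch only protect debug builds.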

@@ -394,13 +387,9 @@

let hasCanonicalizer = 1;

}

+// Only supports buffer semantics.

class SingleInputPoolingBase_Op

- : PoolingBase_Op,

- // Despite having reductions, this manually defined ConvOp may only take

- // memref operands and can never have init tensors.

- ZeroInitTensors,

- NOutputs<1>]> {

+ : PoolingBase_Op {

let description = [{

A base class for single input pooling function.

@@ -420,6 +409,14 @@

OptionalAttr:$padding);

let extraClassDeclaration = commonUtils# [{

+ ValueRange inputs() {

+ return OperandRange{getOperands().begin(), getOperands().begin() + 2};

+ }

+

+ ValueRange outputs() {

+ return OperandRange{getOperands().begin() + 2, getOperands().begin() + 3};

+ }

+

ArrayAttr iterator_types() {

// Outer parallel loops are always the number of output dimensions.

unsigned nPar = getOutputShapedType(0).getRank();

@@ -493,11 +490,9 @@

class GenericOpBase : LinalgStructuredBase_Op,

- NamedStructuredOpTrait,

SingleBlockImplicitTerminator<"YieldOp">]> {

let arguments = (ins Variadic:$inputs,

- Variadic:$output_buffers,

- Variadic:$init_tensors,

+ Variadic:$outputs,

AffineMapArrayAttr:$indexing_maps,

ArrayAttr:$iterator_types,

OptionalAttr:$doc,

@@ -622,34 +617,26 @@

```mlir

%C = linalg.generic #trait_attribute

ins(%A, %B : tensor, memref)

- init(%C : tensor)

+ outs(%C : tensor)

{other-optional-attributes}

{region}

-> (tensor)

```

-

- The `init` operand and the conventions around mixing tensors and buffers are

- described in more detail in the "Tensors and Buffers: Conventions and

- Limitations" section in the [Linalg Document](../docs/Linalg.md)

-

- Tensor values must be legalized by a buffer allocation pass before most

- transformations can be applied. Such legalizations move tensor return values

- into output buffer operands and updates the region arguments accordingly.

}];

let builders = [

OpBuilderDAG<(ins "TypeRange":$resultTensorTypes, "ValueRange":$inputs,

- "ValueRange":$outputBuffers, "ValueRange":$initTensors,

- "ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

- "StringRef":$doc, "StringRef":$libraryCall,

+ "ValueRange":$outputs, "ArrayRef":$indexingMaps,

+ "ArrayRef":$iteratorTypes, "StringRef":$doc,

+ "StringRef":$libraryCall,

CArg<"function_ref", "nullptr">)>,

OpBuilderDAG<(ins "ValueRange":$inputs, "ValueRange":$outputBuffers,

"ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

"StringRef":$doc, "StringRef":$libraryCall,

CArg<"function_ref", "nullptr">)>,

OpBuilderDAG<(ins "TypeRange":$resultTensorTypes, "ValueRange":$inputs,

- "ValueRange":$outputBuffers, "ValueRange":$initTensors,

- "ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

+ "ValueRange":$outputs, "ArrayRef":$indexingMaps,

+ "ArrayRef":$iteratorTypes,

CArg<"function_ref", "nullptr">)>,

OpBuilderDAG<(ins "ValueRange":$inputs, "ValueRange":$outputBuffers,

"ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

@@ -714,8 +701,8 @@

```mlir

linalg.indexed_generic #matmul_trait

- ins(%A, %B : memref,

- memref)

+ ins(%A, %B : memref,

+ memref)

outs(%C : memref) {

(%offset_m: index, %offset_n: index, %offset_k: index,

%a: f32, %b: f32, %c: f32) :

@@ -761,27 +748,19 @@

```mlir

%C = linalg.indexed_generic #trait_attribute

- ins(%A, %B : tensor, memref)

- init(%C : tensor)

+ ins(%A, %B : tensor, memref)

+ outs(%C : tensor)

{other-optional-attributes}

{region_with_index_arguments}

-> (tensor)

```

-

- The `init` operand and the conventions around mixing tensors and buffers are

- described in more detail in the "Tensors and Buffers: Conventions and

- Limitations" section in the [Linalg Document](../docs/Linalg.md)

-

- Tensor values must be legalized by a buffer allocation pass before most

- transformations can be applied. Such legalizations move tensor return values

- into output buffer operands and update the region arguments accordingly.

}];

let builders = [

OpBuilderDAG<(ins "TypeRange":$resultTensorTypes, "ValueRange":$inputs,

- "ValueRange":$outputBuffers, "ValueRange":$initTensors,

- "ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

- "StringRef":$doc, "StringRef":$libraryCall,

+ "ValueRange":$outputs, "ArrayRef":$indexingMaps,

+ "ArrayRef":$iteratorTypes, "StringRef":$doc,

+ "StringRef":$libraryCall,

CArg<"function_ref",

"nullptr">)>,

OpBuilderDAG<(ins "ValueRange":$inputs, "ValueRange":$outputBuffers,

@@ -790,8 +769,8 @@

CArg<"function_ref",

"nullptr">)>,

OpBuilderDAG<(ins "TypeRange":$resultTensorTypes, "ValueRange":$inputs,

- "ValueRange":$outputBuffers, "ValueRange":$initTensors,

- "ArrayRef":$indexingMaps, "ArrayRef":$iteratorTypes,

+ "ValueRange":$outputs, "ArrayRef":$indexingMaps,

+ "ArrayRef":$iteratorTypes,

CArg<"function_ref",

"nullptr">)>,

OpBuilderDAG<(ins "ValueRange":$inputs, "ValueRange":$outputBuffers,

diff --git a/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOpsInterface.td b/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOpsInterface.td

--- a/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOpsInterface.td

+++ b/mlir/include/mlir/Dialect/Linalg/IR/LinalgStructuredOpsInterface.td

@@ -20,6 +20,24 @@

def LinalgStructuredInterface : OpInterface<"LinalgOp"> {

let cppNamespace = "::mlir::linalg";

let methods = [

+ //===------------------------------------------------------------------===//

+ // Loop types handling.

+ //===------------------------------------------------------------------===//

+ InterfaceMethod<

+ /*desc=*/[{

+ Return the number of induction variables in the basic block. This should

+ always be 0 for index-free linalg ops. For IndexedGeneric, this must be

+ equal to numLoops.

+ }],

+ /*retTy=*/"unsigned",

+ /*methodName=*/"getNumPayloadInductionVariables",

+ /*args=*/(ins),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ return isa(this->getOperation()) ?

+ $_op.getNumLoops() : 0;

+ }]

+ >,

//===------------------------------------------------------------------===//

// Loop types handling.

//===------------------------------------------------------------------===//
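The new `getNumPayloadInductionVariables` method captures how payload block signatures differ: `linalg.indexed_generic` receives one `index` argument per loop before the operand scalars (as in the `indexed_generic` matmul example earlier in this diff, where `%offset_m`, `%offset_n`, `%offset_k` precede `%a`, `%b`, `%c`), while index-free ops receive only the operand scalars. A minimal C++ sketch over a hypothetical `PayloadSignature` model:

```cpp
#include <cassert>

// Hypothetical model of a structured op's payload block signature.
struct PayloadSignature {
  bool isIndexedGeneric; // Stand-in for the isa check on the operation.
  unsigned numLoops;
  unsigned numOperands; // Inputs plus outputs, one scalar argument each.
};

// Mirrors the default implementation added in this patch.
unsigned getNumPayloadInductionVariables(const PayloadSignature &sig) {
  return sig.isIndexedGeneric ? sig.numLoops : 0;
}

// Total block arguments: induction variables first, then operand scalars.
unsigned getNumPayloadBlockArguments(const PayloadSignature &sig) {
  return getNumPayloadInductionVariables(sig) + sig.numOperands;
}

// Hypothetical helper: block argument position of operand `i`'s scalar.
unsigned getOperandBlockArgumentIndex(const PayloadSignature &sig,
                                      unsigned i) {
  assert(i < sig.numOperands && "operand index out of range");
  return getNumPayloadInductionVariables(sig) + i;
}
```

Offsetting by the number of payload induction variables lets the same code address operand scalars in both kinds of ops.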

@@ -125,42 +143,40 @@

getNumIterators(getReductionIteratorTypeName(), iters) == 1;

}]>,

//===------------------------------------------------------------------===//

- // Num input/output/initTensors arguments handling.

+ // Num input/output arguments handling.

//===------------------------------------------------------------------===//

- // These special methods must be defined by each op that wants to implement

- // the LinalgStructuredInterface. For now, this is either:

- // - Explicitly specified in the op definition.

- // - Derived from variadic attributes (for "named" ops, linalg.generic and

- // linalg.indexed_generic ops).

+ // These special methods rely on `inputs` and `outputs` being defined by

+ // each op that wants to implement the LinalgStructuredInterface.

InterfaceMethod<

/*desc=*/[{

Return the number of inputs.

}],

/*retTy=*/"unsigned",

- /*methodName=*/"getNumInputs"

- >,

- InterfaceMethod<

- /*desc=*/[{

- Return the number of init tensors.

- }],

- /*retTy=*/"unsigned",

- /*methodName=*/"getNumInitTensors"

+ /*methodName=*/"getNumInputs",

+ /*args=*/(ins),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ return $_op.inputs().size();

+ }]

>,

InterfaceMethod<

/*desc=*/[{

Return the number of outputs.

}],

/*retTy=*/"unsigned",

- /*methodName=*/"getNumOutputs"

+ /*methodName=*/"getNumOutputs",

+ /*args=*/(ins),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ return $_op.outputs().size();

+ }]

>,

//===------------------------------------------------------------------===//

- // Input arguments handling.

+ // Input operands handling.

//===------------------------------------------------------------------===//

InterfaceMethod<

/*desc=*/[{

- Return the `i`-th input value.

- The `i^th` input argument is always the `i^th` operand regardless of

- whether we have tensors or buffers.

+ Return the `i`-th input operand.

}],

/*retTy=*/"Value",

/*methodName=*/"getInput",

@@ -173,24 +189,7 @@

>,

InterfaceMethod<

/*desc=*/[{

- Return the index of the given input value `v`, or `None` if the value is

- not an input.

- }],

- /*retTy=*/"llvm::Optional",

- /*methodName=*/"getIndexOfInput",

- /*args=*/(ins "Value":$value),

- /*methodBody=*/"",

- /*defaultImplementation=*/[{

- auto it = llvm::find(getInputs(), value);

- if (it != getInputs().end())

- return it - getInputs().begin();

- return llvm::None;

- }]

- >,

- InterfaceMethod<

- /*desc=*/[{

- Return the `i`-th input shaped type, irrespective of buffer or tensor

- type.

+ Return the `i`-th input shaped type.

}],

/*retTy=*/"ShapedType",

/*methodName=*/"getInputShapedType",

@@ -202,7 +201,7 @@

>,

InterfaceMethod<

/*desc=*/[{

- Return the input operands.

+ Return the range of input operands.

}],

/*retTy=*/"Operation::operand_range",

/*methodName=*/"getInputs",

@@ -215,7 +214,19 @@

>,

InterfaceMethod<

/*desc=*/[{

- Return the range over the input operands that are of buffer type.

+ Return the OpOperands for the input operands.

+ }],

+ /*retTy=*/" MutableArrayRef",

+ /*methodName=*/"getInputOpOperands",

+ /*args=*/(ins),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ return this->getOperation()->getOpOperands().take_front(getNumInputs());

+ }]

+ >,

+ InterfaceMethod<

+ /*desc=*/[{

+ Return the subset of input operands that are of buffer type.

}],

/*retTy=*/"SmallVector",

/*methodName=*/"getInputBuffers",
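`getInputOpOperands()` returns the first `getNumInputs()` operand slots, and this patch layers buffer and tensor filters on top of it (`getInputBuffersOpOperands`, `getInputTensorsOpOperands`). A minimal C++ sketch of that take-front-then-filter pattern, assuming a hypothetical `Operand` struct whose `Kind` tag stands in for the memref/tensor type checks. Returning pointers rather than values lets callers identify the exact operand slot.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical operand kinds standing in for memref vs. tensor types.
enum class Kind { Buffer, Tensor };

struct Operand {
  Kind kind;
  int id;
};

// Analogue of getInputOpOperands(): pointers to the first `numInputs` slots.
std::vector<Operand *> inputOperands(std::vector<Operand> &operands,
                                     std::size_t numInputs) {
  assert(numInputs <= operands.size() && "more inputs than operands");
  std::vector<Operand *> res;
  res.reserve(numInputs);
  for (std::size_t i = 0; i < numInputs; ++i)
    res.push_back(&operands[i]);
  return res;
}

// Analogue of the buffer/tensor filters: keep the input slots of one kind,
// preserving operand order.
std::vector<Operand *> inputOperandsOfKind(std::vector<Operand> &operands,
                                           std::size_t numInputs, Kind kind) {
  std::vector<Operand *> res;
  for (Operand *o : inputOperands(operands, numInputs))
    if (o->kind == kind)
      res.push_back(o);
  return res;
}
```

Output operands never appear in the result because the filter only looks at the first `numInputs` slots.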

@@ -223,417 +234,500 @@

/*methodBody=*/"",

/*defaultImplementation=*/[{

return llvm::to_vector<4>(llvm::make_filter_range(

- getInputs(), [](Value in){ return in.getType().isa(); }));

+ getInputs(), [](Value in){ return in.getType().template isa(); }));

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the subset of input operands that are of ranked tensor type.

+ Return the number of input buffer operands.

}],

- /*retTy=*/"SmallVector",

- /*methodName=*/"getInputTensorTypes" ,

+ /*retTy=*/"unsigned",

+ /*methodName=*/"getNumInputBuffers",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- SmallVector res;

- for (Type type : getInputs().getTypes())

- if (auto t = type.template dyn_cast())

- res.push_back(t);

- return res;

+ return $_op.getInputBuffers().size();

}]

>,

- //===------------------------------------------------------------------===//

- // Output arguments handling.

- //===------------------------------------------------------------------===//

InterfaceMethod<

/*desc=*/[{

- Return the output buffer at the given index, asserts that this is a

- buffer operand and not a tensor result.

- The `i^th` output argument is an operand (resp. a return value) iff it

- is a value of buffer type (resp. a return value of tensor type).

+ Return the `index`^th input buffer.

}],

/*retTy=*/"Value",

- /*methodName=*/"getOutputBuffer",

- /*args=*/(ins "unsigned":$i),

+ /*methodName=*/"getInputBuffer",

+ /*args=*/(ins "unsigned":$index),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- // Output buffers are passed as output buffer operands (side-effecting).

- // Output tensors are results.

- // The union of the 2 are all the outputs and we want to ensure i does

- // not overflow the buffer operands.

- assert(i + this->getOperation()->getNumResults() < $_op.getNumOutputs()

- && "overflowing output buffer index");

- return this->getOperation()->getOperand($_op.getNumInputs() + i);

+ assert(index < getNumInputBuffers() && "overflowing input buffer index");

+ return getInputBuffers()[index];

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the index of the given buffer value, or `None` if the value is

- not part of the output buffers.

+ Return the OpOperands of the input operands that are of buffer type.

}],

- /*retTy=*/"llvm::Optional",

- /*methodName=*/"getIndexOfOutputBuffer",

- /*args=*/(ins "Value":$value),

+ /*retTy=*/"SmallVector",

+ /*methodName=*/"getInputBuffersOpOperands",

+ /*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- auto it = llvm::find(getOutputBuffers(), value);

- if (it != getOutputBuffers().end())

- return it - getOutputBuffers().begin();

- return llvm::None;

+ SmallVector res;

+ res.reserve(getNumInputs());

+ for (OpOperand &o : getInputOpOperands())

+ if (o.get().getType().isa())

+ res.push_back(&o);

+ return res;

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the type of the output buffer at the given index.

+ Return the subset of input operands that are of tensor type.

}],

- /*retTy=*/"MemRefType",

- /*methodName=*/"getOutputBufferType",

- /*args=*/(ins "unsigned":$i),

+ /*retTy=*/"SmallVector",

+ /*methodName=*/"getInputTensors",

+ /*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- return getOutputBuffer(i).getType().template cast();

- }]>,

+ return llvm::to_vector<4>(llvm::make_filter_range(

+ getInputs(),

+ [](Value in){ return in.getType().template isa(); }));

+ }]

+ >,

InterfaceMethod<

/*desc=*/[{

- Return the `i`-th output shaped type, irrespective of buffer or tensor

- type.

+ Return the OpOperands of the input operands that are of tensor type.

}],

- /*retTy=*/"ShapedType",

- /*methodName=*/"getOutputShapedType",

- /*args=*/(ins "unsigned":$i),

+ /*retTy=*/"SmallVector",

+ /*methodName=*/"getInputTensorsOpOperands",

+ /*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- return getShapedType(i + $_op.getNumInputs());

- }]>,

+ SmallVector<OpOperand*, 4> res;

+ res.reserve(getNumInputs());

+ for (OpOperand &o : getInputOpOperands())

+ if (o.get().getType().isa<RankedTensorType>())

+ res.push_back(&o);

+ return res;

+ }]

+ >,

InterfaceMethod<

/*desc=*/[{

- Return the results that are of ranked tensor type.

+ Return the types of the subset of input operands that are of buffer type.

}],

- /*retTy=*/"SmallVector<RankedTensorType, 4>",

- /*methodName=*/"getOutputTensorTypes",

+ /*retTy=*/"SmallVector<MemRefType, 4>",

+ /*methodName=*/"getInputBufferTypes",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- SmallVector<RankedTensorType, 4> res;

- for (Type type : this->getOperation()->getResults().getTypes())

- res.push_back(type.template cast<RankedTensorType>());

- return res;

- }]>,

+ return llvm::to_vector<4>(

+ llvm::map_range(

+ llvm::make_filter_range(

+ ValueRange(getInputs()).getTypes(),

+ [](Type in){ return in.isa<MemRefType>(); }),

+ [](Type in){ return in.cast<MemRefType>(); }));

+ }]

+ >,
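`getInputBufferTypes` composes two range adaptors. A filter first keeps the memref types, and a map then casts each surviving type to `MemRefType`. The cast is safe only because the filter runs first. The sketch below reproduces this filter-then-map shape with `std::variant`. `MemRefTy`, `TensorTy`, and `inputBufferTypes` are hypothetical; `std::holds_alternative` and `std::get` stand in for `isa<>` and `cast<>`.

```cpp
#include <cassert>
#include <variant>
#include <vector>

// Hypothetical stand-ins for mlir::MemRefType and mlir::RankedTensorType.
struct MemRefTy { int rank; };
struct TensorTy { int rank; };
using FakeType = std::variant<MemRefTy, TensorTy>;

// Shape of getInputBufferTypes(): keep only memref types (the filter step,
// like isa<MemRefType>()), then extract the concrete type (the map step, like
// cast<MemRefType>()). std::get cannot throw here because of the filter.
std::vector<MemRefTy> inputBufferTypes(const std::vector<FakeType> &inputTypes) {
  std::vector<MemRefTy> res;
  for (const FakeType &t : inputTypes)
    if (std::holds_alternative<MemRefTy>(t))
      res.push_back(std::get<MemRefTy>(t));
  return res;
}
```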

InterfaceMethod<

/*desc=*/[{

- Return the output buffers (operands).

+ Return the types of the subset of input operands that are of ranked

+ tensor type.

}],

- /*retTy=*/"Operation::operand_range",

- /*methodName=*/"getOutputBuffers",

+ /*retTy=*/"SmallVector<RankedTensorType, 4>",

+ /*methodName=*/"getInputTensorTypes",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- auto range = this->getOperation()->getOperands();

- return {range.begin() + $_op.getNumInputs(),

- range.begin() + getNumInputsAndOutputBuffers()};

+ return llvm::to_vector<4>(

+ llvm::map_range(

+ llvm::make_filter_range(

+ ValueRange(getInputs()).getTypes(),

+ [](Type in){ return in.isa<RankedTensorType>(); }),

+ [](Type in){ return in.cast<RankedTensorType>(); }));

}]

>,

//===------------------------------------------------------------------===//

- // Input and Output arguments handling.

+ // Output operands handling.

//===------------------------------------------------------------------===//

InterfaceMethod<

/*desc=*/[{

- Return one single buffer at position `$i`.

+ Return the `i`-th output operand.

}],

/*retTy=*/"Value",

- /*methodName=*/"getBuffer",

+ /*methodName=*/"getOutput",

/*args=*/(ins "unsigned":$i),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- assert(i < getNumInputsAndOutputBuffers() && "overflowing buffers index");

- return this->getOperation()->getOperand(i);

+ assert(i < $_op.getNumOutputs());

+ return this->getOperation()->getOperand(i + $_op.getNumInputs());

}]

>,
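The default implementation of `getOutput` relies on the operand layout of structured ops. All inputs come first and the outputs follow, so the `i`-th output sits at operand position `i + getNumInputs()`. Below is a hypothetical model of that layout. `FakeStructuredOp` is illustrative only, and it derives the output count from the operand count, which assumes the op has no other operands.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of a structured op's operand list: all inputs first,
// followed by all outputs, as the default implementations above assume.
struct FakeStructuredOp {
  std::vector<std::string> operands;
  unsigned numInputs;

  // Simplification: every operand that is not an input is an output.
  unsigned numOutputs() const {
    return static_cast<unsigned>(operands.size()) - numInputs;
  }

  // Mirrors getOutput(i): the i-th output lives at position i + numInputs.
  const std::string &getOutput(unsigned i) const {
    assert(i < numOutputs() && "output index out of range");
    return operands[i + numInputs];
  }
};
```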

InterfaceMethod<

/*desc=*/[{

- Return the number of output buffers

+ Return the shaped type of the `i`-th output operand.

}],

- /*retTy=*/"unsigned",

- /*methodName=*/"getNumOutputBuffers",

+ /*retTy=*/"ShapedType",

+ /*methodName=*/"getOutputShapedType",

+ /*args=*/(ins "unsigned":$i),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ return getOutput(i).getType().template cast<ShapedType>();

+ }]

+ >,

+ InterfaceMethod<

+ /*desc=*/[{

+ Return the range of output operands.

+ }],

+ /*retTy=*/"Operation::operand_range",

+ /*methodName=*/"getOutputs",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- return $_op.getNumOutputs() - this->getOperation()->getNumResults();

+ auto start =

+ this->getOperation()->getOperands().begin() + $_op.getNumInputs();

+ return {start, start + $_op.getNumOutputs()};

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the number of inputs and outputs, irrespective of their buffer or

- tensor type.

+ Return the OpOperands for the output operands.

}],

- /*retTy=*/"unsigned",

- /*methodName=*/"getNumInputsAndOutputs",

+ /*retTy=*/"MutableArrayRef<OpOperand>",

+ /*methodName=*/"getOutputOpOperands",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- return $_op.getNumInputs() + $_op.getNumOutputs();

+ return this->getOperation()->getOpOperands().slice(

+ getNumInputs(), getNumOutputs());

}]

>,
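`getOutputOpOperands` expresses the same layout as a slice over all `OpOperand`s: it skips `getNumInputs()` entries and keeps the next `getNumOutputs()`. The sketch below uses a hypothetical `MutableView` with the same `slice(N, M)` contract as `llvm::MutableArrayRef::slice`. Because the view is non-owning, writes through it modify the original operands.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal hypothetical stand-in for llvm::MutableArrayRef: a non-owning view.
template <typename T>
struct MutableView {
  T *data;
  std::size_t size;

  // Same contract as MutableArrayRef::slice(N, M): drop N, keep M elements.
  MutableView slice(std::size_t n, std::size_t m) const {
    assert(n + m <= size && "slice out of bounds");
    return {data + n, m};
  }

  T &operator[](std::size_t i) const {
    assert(i < size && "index out of bounds");
    return data[i];
  }
};

// Hypothetical operand type carrying a payload.
struct FakeOpOperand { int value; };

// getOutputOpOperands() as a slice: skip the inputs, keep the outputs.
MutableView<FakeOpOperand> outputOpOperands(std::vector<FakeOpOperand> &all,
                                            std::size_t numInputs,
                                            std::size_t numOutputs) {
  MutableView<FakeOpOperand> whole{all.data(), all.size()};
  return whole.slice(numInputs, numOutputs);
}
```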

InterfaceMethod<

/*desc=*/[{

- Return the number of inputs, irrespective of their buffer or tensor type

- and output buffers

+ Return the subset of output operands that are of buffer type.

}],

- /*retTy=*/"unsigned",

- /*methodName=*/"getNumInputsAndOutputBuffers",

+ /*retTy=*/"SmallVector<Value, 4>",

+ /*methodName=*/"getOutputBuffers",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- return $_op.getNumInputs() + $_op.getNumOutputs() -

- this->getOperation()->getNumResults();

+ return llvm::to_vector<4>(llvm::make_filter_range(

+ getOutputs(), [](Value in){ return in.getType().template isa<MemRefType>(); }));

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the range over inputs (irrespective of type) and output buffers.

+ Return the `index`-th output buffer.

}],

- /*retTy=*/"Operation::operand_range",

- /*methodName=*/"getInputsAndOutputBuffers",

+ /*retTy=*/"Value",

+ /*methodName=*/"getOutputBuffer",

+ /*args=*/(ins "unsigned":$index),

+ /*methodBody=*/"",

+ /*defaultImplementation=*/[{

+ assert(index < getNumOutputBuffers());

+ return getOutputBuffers()[index];

+ }]

+ >,
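The `index` passed to `getOutputBuffer` counts buffer-typed outputs only. When tensor and buffer outputs are mixed, it differs from the output's position among all outputs. Below is a hypothetical sketch of that distinction; `FakeOutput`, `outputBuffers`, and `outputBuffer` are illustrative names, not MLIR API.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in: each output operand is either a buffer or a tensor.
struct FakeOutput {
  bool isBuffer;
  int id;
};

// Mirrors getOutputBuffers(): keep only buffer-typed outputs, in order.
std::vector<FakeOutput> outputBuffers(const std::vector<FakeOutput> &outputs) {
  std::vector<FakeOutput> res;
  for (const FakeOutput &o : outputs)
    if (o.isBuffer)
      res.push_back(o);
  return res;
}

// Mirrors getOutputBuffer(index): `index` counts buffer outputs only, so it
// is not the output's position among all outputs when tensors are mixed in.
FakeOutput outputBuffer(const std::vector<FakeOutput> &outputs, unsigned index) {
  std::vector<FakeOutput> buffers = outputBuffers(outputs);
  assert(index < buffers.size() && "buffer index out of range");
  return buffers[index];
}
```

Like the default implementation above, each call rebuilds the filtered list. Looking up every buffer one index at a time therefore costs time quadratic in the number of outputs. A caller that needs all of them can call `getOutputBuffers` once instead.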

+ InterfaceMethod<

+ /*desc=*/[{

+ Return the subset of output operands that are of buffer type.

+ }],

+ /*retTy=*/"SmallVector<OpOperand*, 4>",

+ /*methodName=*/"getOutputBuffersOpOperands",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- auto range = this->getOperation()->getOperands();

- return {range.begin(), range.begin() + getNumInputsAndOutputBuffers()};

+ SmallVector<OpOperand*, 4> res;

+ res.reserve(getNumOutputs());

+ for (OpOperand &o : getOutputOpOperands())

+ if (o.get().getType().isa<MemRefType>())

+ res.push_back(&o);

+ return res;

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return the range over init tensors.

+ Return the number of output buffer operands.

}],

- /*retTy=*/"Operation::operand_range",

- /*methodName=*/"getInitTensors",

+ /*retTy=*/"unsigned",

+ /*methodName=*/"getNumOutputBuffers",

/*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- auto range = this->getOperation()->getOperands();

- auto base = range.begin() + getNumInputsAndOutputBuffers();

- return {base, base + $_op.getNumInitTensors()};

+ return $_op.getOutputBuffers().size();

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return one single init tensor at position `$i`.

+ Return the subset of output operands that are of tensor type.

}],

- /*retTy=*/"Value",

- /*methodName=*/"getInitTensor",

- /*args=*/(ins "unsigned":$i),

+ /*retTy=*/"SmallVector<Value, 4>",

+ /*methodName=*/"getOutputTensors",

+ /*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- assert(i < $_op.getNumInitTensors() && "overflowing init tensor index");

- return getInitTensors()[i];

+ return llvm::to_vector<4>(llvm::make_filter_range(

+ getOutputs(),

+ [](Value in){ return in.getType().template isa<RankedTensorType>(); }));

}]

>,

InterfaceMethod<

/*desc=*/[{

- Return true if the shaped operand index `i` is the index of an init

- tensor.

+ Return the subset of output operands that are of tensor type.

}],

- /*retTy=*/"bool",

- /*methodName=*/"isIndexOfAnInitTensor",

- /*args=*/(ins "unsigned":$i),

+ /*retTy=*/"SmallVector<OpOperand*, 4>",

+ /*methodName=*/"getOutputTensorsOpOperands",

+ /*args=*/(ins),

/*methodBody=*/"",

/*defaultImplementation=*/[{

- assert(i < $_op.getNumShapedOperands() && "overflowing shaped operand index");

- return i >= $_op.getNumInputs() + getNumOutputBuffers();

+ SmallVector<OpOperand*, 4> res;

+ res.reserve(getNumOutputs());

+ for (OpOperand &o : getOutputOpOperands())

+ if (o.get().getType().isa<RankedTensorType>())